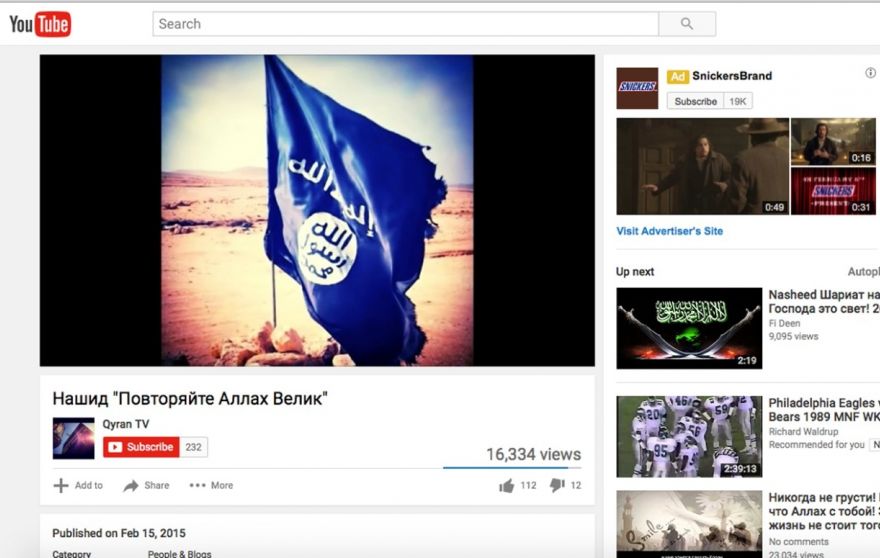

On Super Bowl day, companies paid up to $5 million for 30 seconds to be seen by the right demographic. Meanwhile on YouTube, the same ads run as pre-rolls to terror-linked videos. This year's Super Bowl ads from brands like Budweiser and Hyundai are pre-rolls on terror-linked video channels such as wowlwowl and Qyran TV. Videos such as nasheeds, which are aimed at radicalizing a fresh crop of jihadists, carry "you're not you when you're hungry" Snickers ads. YouTube has an automated system that shotguns ads onto all sorts of channels, and YouTube is very bad at weeding out seriously objectionable content automatically, which is how you get such bizarre combinations of brands and jihadists.

Hyundai have stated they will "Talk to YouTube to see what we can do to prevent this sort of thing happening in the future." The simplest thing they can do is pull all of their advertising media budget from all Google media, right now. Pre-rolls on YouTube aside, even AdSense banners end up in shady places, as we've previously reported. There's seemingly no human vetting at all of the content that Google places advertising on. It's all keywords and huge margins of error.

Brands risk their reputation when their ads appear on the wrong content. If Kellogg's has to pull advertising from Breitbart due to "hate speech", imagine the situation when name brands are literally paying terrorist organisations via YouTube ads: Budweiser's "Raise one to Right Now" before a beheading video, or Mr. Clean's "You gotta love a man who cleans" before a nasheed that preaches terror.

Eric Feinberg, CEO of GIPEC, a company which monitors where brands advertise online, sums it up:

“The advertising community needs to take responsibility, go to YouTube, and threaten to pull their ads.”

YouTube has a simple rallying cry for anyone who wishes to join them in monetising their online content: “more views lead to more revenue,” they say. So when an ISIS recruitment video has several hundred thousand views and a pre-roll ad, it's safe to assume that YouTube are paying the channel owner for that monetisation. When we queried YouTube directly about specific channels, we received no response, but the channels vanished once they had been pointed out.

Of course, the community guidelines state that there shouldn't be terrorism-related channels at all, and "hate speech" isn't tolerated either, though what constitutes hate speech is ill-defined. According to Kellogg's and the ad network involved with Breitbart, that entire website is "hate speech", not just a right-wing news site with pundits. In a similar vein, YouTube could end up demonetizing any channels connected to fringe conservative news sources such as Breitbart, since they post a majority of their content on YouTube.

YouTube regularly terminate accounts that violate their terms of service. I've witnessed this first-hand when reporting an account for copyright infringement: three strikes and the account is gone automatically. When it comes to terror-related videos, someone needs to flag them, much like any copyrighted content, so that they are brought to YouTube's attention. So, exactly like copyright-infringing parties, these accounts can exist and generate income for as long as nobody reports them. There are bots that create accounts and upload videos to them on YouTube, so the end result is humans playing whack-a-mole with computer systems.

DMCA Safe Harbor laws may not protect YouTube in the case of actual money sent to real terrorist organisations, as Google writes checks to, or pays straight into the bank accounts of, anyone who has signed up to monetize their videos, which requires showing ID and signing tax forms. Crunching some numbers through the YouTube money calculator, we found that some of these channels were generating thousands of dollars per day.

Until YouTube filters the content, they have no way of keeping ads off controversial videos. There's no filtering when videos are uploaded, and there's no human that checks channels after they have become established - everything is automated. When you buy advertising on YouTube you have the option of booking only the YouTube masthead, but anything else is simply targeted (poorly) by keyword. It's high time advertisers left that risk behind and moved their online spending elsewhere, before their brand is forever associated with Syrian war images.